Hongzheng Chen Blog

HLS for Deep Learning Applications

Oct 15th, 2020

This post surveys several high-level synthesis (HLS) systems that target deep neural networks (DNNs), including DNNBuilder [ICCAD'18] and FlexCNN [FPGA'20].

DNNBuilder & FlexCNN

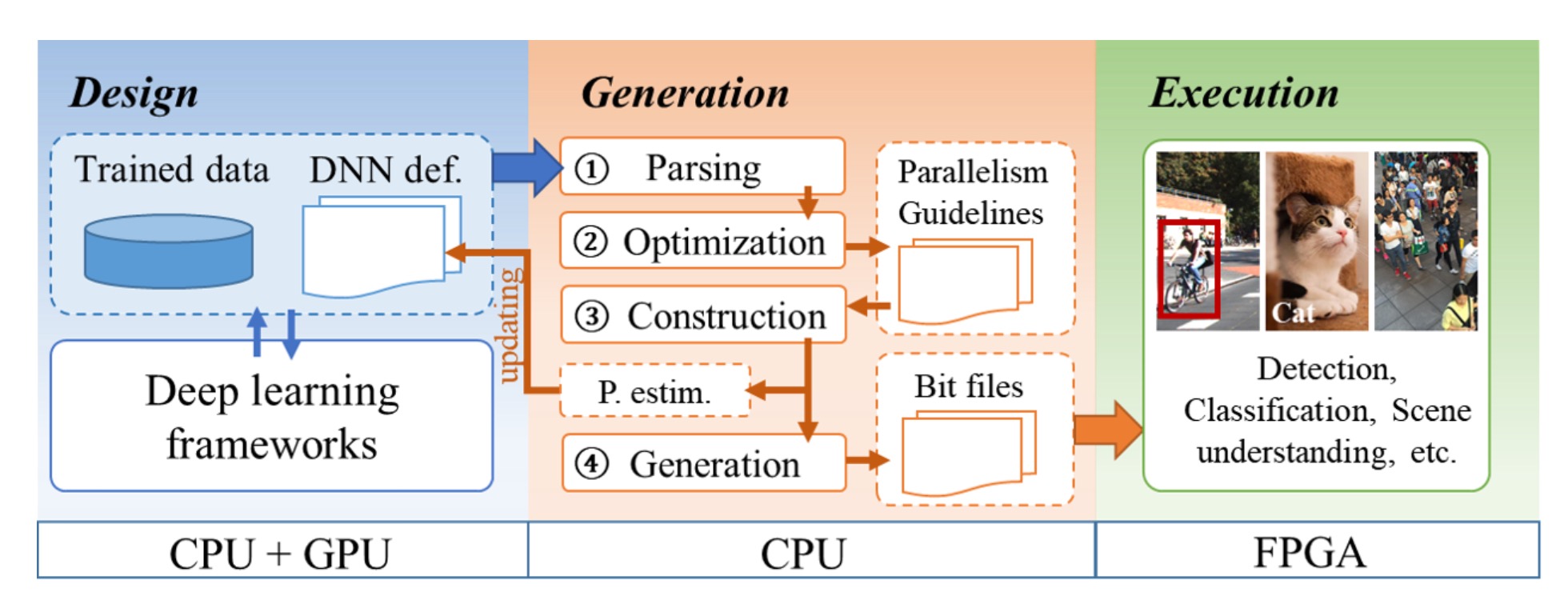

Compilation Flow

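DNNBuilder [1] takes a Caffe network definition at its front end and emits RTL code at its back end. FlexCNN [2] takes a TensorFlow network definition at its front end and emits HLS C code at its back end.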

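FlexCNN additionally supports dynamic tiling, so that different layers can use different tile sizes. It is built as an end-to-end compile-and-run system, and it also optimizes the host-to-device communication overhead.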
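To make per-layer tiling concrete, here is a minimal pure-Python sketch. It is not FlexCNN's implementation: the function names `conv2d` and `conv2d_tiled` and the parameters `tile_m` (output channels per tile) and `tile_h` (output rows per tile) are hypothetical, and the convolution assumes stride 1 and no padding. The point is only that the tiled loop nest produces the same result as the direct one, so each layer is free to pick its own tile shape.

```python
def conv2d(inp, weight):
    """Direct convolution. inp: [C][H][W], weight: [M][C][K][K]; stride 1, no padding."""
    C, H, W = len(inp), len(inp[0]), len(inp[0][0])
    M, K = len(weight), len(weight[0][0])
    OH, OW = H - K + 1, W - K + 1
    out = [[[0] * OW for _ in range(OH)] for _ in range(M)]
    for m in range(M):
        for y in range(OH):
            for x in range(OW):
                out[m][y][x] = sum(
                    inp[c][y + i][x + j] * weight[m][c][i][j]
                    for c in range(C) for i in range(K) for j in range(K)
                )
    return out


def conv2d_tiled(inp, weight, tile_m, tile_h):
    """Same result, computed tile by tile (tile_m output channels x tile_h output rows)."""
    C, H, W = len(inp), len(inp[0]), len(inp[0][0])
    M, K = len(weight), len(weight[0][0])
    OH, OW = H - K + 1, W - K + 1
    out = [[[0] * OW for _ in range(OH)] for _ in range(M)]
    for m0 in range(0, M, tile_m):
        for y0 in range(0, OH, tile_h):
            # On an accelerator, the inputs and weights of this tile would be
            # loaded into on-chip buffers here before the inner loops run.
            for m in range(m0, min(m0 + tile_m, M)):
                for y in range(y0, min(y0 + tile_h, OH)):
                    for x in range(OW):
                        out[m][y][x] = sum(
                            inp[c][y + i][x + j] * weight[m][c][i][j]
                            for c in range(C) for i in range(K) for j in range(K)
                        )
    return out
```

A layer with many channels and small feature maps might call `conv2d_tiled(x, w, tile_m=8, tile_h=2)`, while an early layer with large feature maps might prefer a small `tile_m` and a larger `tile_h`; the result is identical either way.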

Both systems use design space exploration (DSE) to optimize parameters such as tile sizes and buffer sizes.
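As a rough illustration of what such a DSE does, the sketch below exhaustively searches tile sizes for one convolution layer under an on-chip buffer budget. Everything here is an assumption made for illustration: the cost model, the names `tile_buffer_words`, `estimated_cycles`, and `explore`, and the default values of `macs_per_cycle` and `words_per_cycle`. Neither paper's actual cost model or search strategy is reproduced.

```python
from itertools import product
from math import ceil


def tile_buffer_words(tm, th, C, W, K):
    """Words of on-chip storage for one tile (hypothetical model)."""
    in_words = C * (th + K - 1) * W      # input rows, including the halo rows
    w_words = tm * C * K * K             # weights for tm output channels
    out_words = tm * th * (W - K + 1)    # output rows produced by the tile
    return in_words + w_words + out_words


def estimated_cycles(tm, th, layer, macs_per_cycle, words_per_cycle):
    """Cycle estimate, assuming compute and data transfer overlap per tile."""
    M, C, H, W, K = layer
    OH, OW = H - K + 1, W - K + 1
    n_tiles = ceil(M / tm) * ceil(OH / th)
    compute = ceil(tm * th * OW * C * K * K / macs_per_cycle)
    transfer = ceil(tile_buffer_words(tm, th, C, W, K) / words_per_cycle)
    return n_tiles * max(compute, transfer)


def explore(layer, buffer_budget, macs_per_cycle=64, words_per_cycle=16):
    """Return (cycles, tm, th) for the best feasible tiling, or None if nothing fits."""
    M, C, H, W, K = layer
    OH = H - K + 1
    best = None
    for tm, th in product(range(1, M + 1), range(1, OH + 1)):
        if tile_buffer_words(tm, th, C, W, K) > buffer_budget:
            continue  # this tile does not fit in the on-chip buffer
        cycles = estimated_cycles(tm, th, layer, macs_per_cycle, words_per_cycle)
        if best is None or cycles < best[0]:
            best = (cycles, tm, th)
    return best
```

Calling `explore((16, 8, 14, 14, 3), buffer_budget=4096)` searches all tilings of a layer with 16 output channels, 8 input channels, a 14x14 input, and a 3x3 kernel. Real tools prune or model this space far more carefully; brute force is used here only because the space is tiny.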

Hardware Design

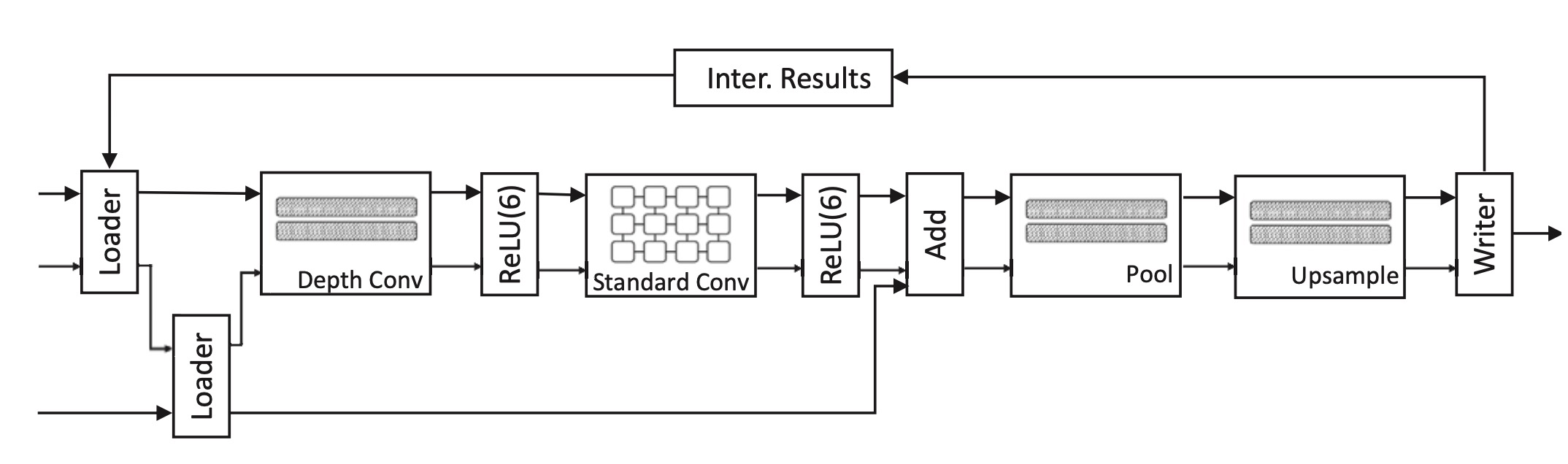

DNNBuilder uses a pipelined architecture and provides parameterized RTL templates that the higher-level framework can configure.
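One reason a pipelined design benefits from configurable templates is that the slowest stage bounds throughput. The sketch below uses a textbook pipeline model, not DNNBuilder's actual scheduling: the function `pipeline_cycles` and the per-stage cycle counts are hypothetical, and it assumes each stage handles one input at a time with buffering between stages.

```python
def pipeline_cycles(stage_cycles, n_inputs):
    """Total cycles to push n_inputs through a linear pipeline.

    stage_cycles[i] is the (assumed) time stage i spends on one input.
    The first input pays the full pipeline depth; every further input
    is delayed only by the slowest stage.
    """
    if n_inputs == 0:
        return 0
    return sum(stage_cycles) + (n_inputs - 1) * max(stage_cycles)
```

For stages taking 3, 5, and 2 cycles, `pipeline_cycles([3, 5, 2], 4)` gives 10 + 3 * 5 = 25 cycles. Rebalancing resources so the 5-cycle stage gets faster helps every input after the first, which is the kind of knob a parameterized template can expose.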

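FlexCNN's architecture resembles a CPU: it adds an instruction unit that configures the parameterized templates. Different kinds of convolution are also implemented differently; standard convolution, for example, is mapped directly onto a systolic array.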
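For readers unfamiliar with systolic arrays, the following is a small cycle-by-cycle simulation of a generic output-stationary array computing a matrix product. It is a sketch under stated assumptions, not FlexCNN's design: the function name `systolic_matmul` is hypothetical, and treating the convolution as a matrix product is a simplification chosen to keep the example short. Each processing element (PE) at position (i, j) accumulates one output, operands from `A` flow left to right, and operands from `B` flow top to bottom with a skewed schedule.

```python
def systolic_matmul(A, B):
    """Simulate an n x m output-stationary systolic array computing A @ B."""
    n, k, m = len(A), len(B), len(B[0])
    acc = [[0] * m for _ in range(n)]    # accumulator held in each PE
    a_reg = [[0] * m for _ in range(n)]  # A operand currently in each PE
    b_reg = [[0] * m for _ in range(n)]  # B operand currently in each PE
    for t in range(n + m + k - 2):
        # Visit PEs from bottom-right to top-left so that each PE reads the
        # value its left/upper neighbour held in the previous cycle.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j > 0:
                    a_reg[i][j] = a_reg[i][j - 1]
                else:
                    s = t - i  # row i of A enters the array i cycles late
                    a_reg[i][j] = A[i][s] if 0 <= s < k else 0
                if i > 0:
                    b_reg[i][j] = b_reg[i - 1][j]
                else:
                    s = t - j  # column j of B enters the array j cycles late
                    b_reg[i][j] = B[s][j] if 0 <= s < k else 0
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc
```

Because of the skew, PE (i, j) sees `A[i][s]` and `B[s][j]` in the same cycle `t = s + i + j`, so every PE performs one multiply-accumulate per cycle using only data passed from its neighbours.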

SOFF

SOFF [3] is a general-purpose HLS system, so it is not covered in detail here for now.

Reference

1. Xiaofan Zhang, Junsong Wang, Chao Zhu, Yonghua Lin, Jinjun Xiong, Wen-mei Hwu, and Deming Chen (UIUC), DNNBuilder: an automated tool for building high-performance DNN hardware accelerators for FPGAs, ICCAD, 2018

2. Atefeh Sohrabizadeh, Jie Wang, Jason Cong (UCLA), End-to-End Optimization of Deep Learning Applications, FPGA, 2020

3. Gangwon Jo, Heehoon Kim, Jeesoo Lee, and Jaejin Lee (Seoul National University), SOFF: An OpenCL High-Level Synthesis Framework for FPGAs, ISCA, 2020